Applying Metrics in Regression Test (or Waterfall)

As a leader within your organization, have you ever said or thought one of the following statements?

“Every time we release new features, we break others.”

“We’ve had some major production bugs that have cost us dearly.”

“Our quality is very inconsistent.”

If yes, then your organization could benefit from a Metric-Based Test Methodology. You might be asking yourself, “Why should we measure software testing? That seems like a lot of unnecessary work that I don’t have time for.” Honestly, it’s the old adage — “You can’t manage what you don’t measure.” And trust us, this additional work will pay off in the long run by preventing the 3 Perils of Software Development: Defects, Delays, and Dollars.

We know implementing a Metric-Based Test Methodology can be easier said than done. So, where is a good place to start? Once you have identified your testing team's maturity level (see part 1 in this blog series), we recommend that you begin by building a data-driven test plan, then collect key metrics during your regression test to validate and improve your future test plans. This approach works equally well whether you run a regression test before major releases or have teams working in Waterfall or "Wagile." Start with the easy and obvious metrics, then move toward the more complex and sophisticated ones.

Metric-Based Test Planning

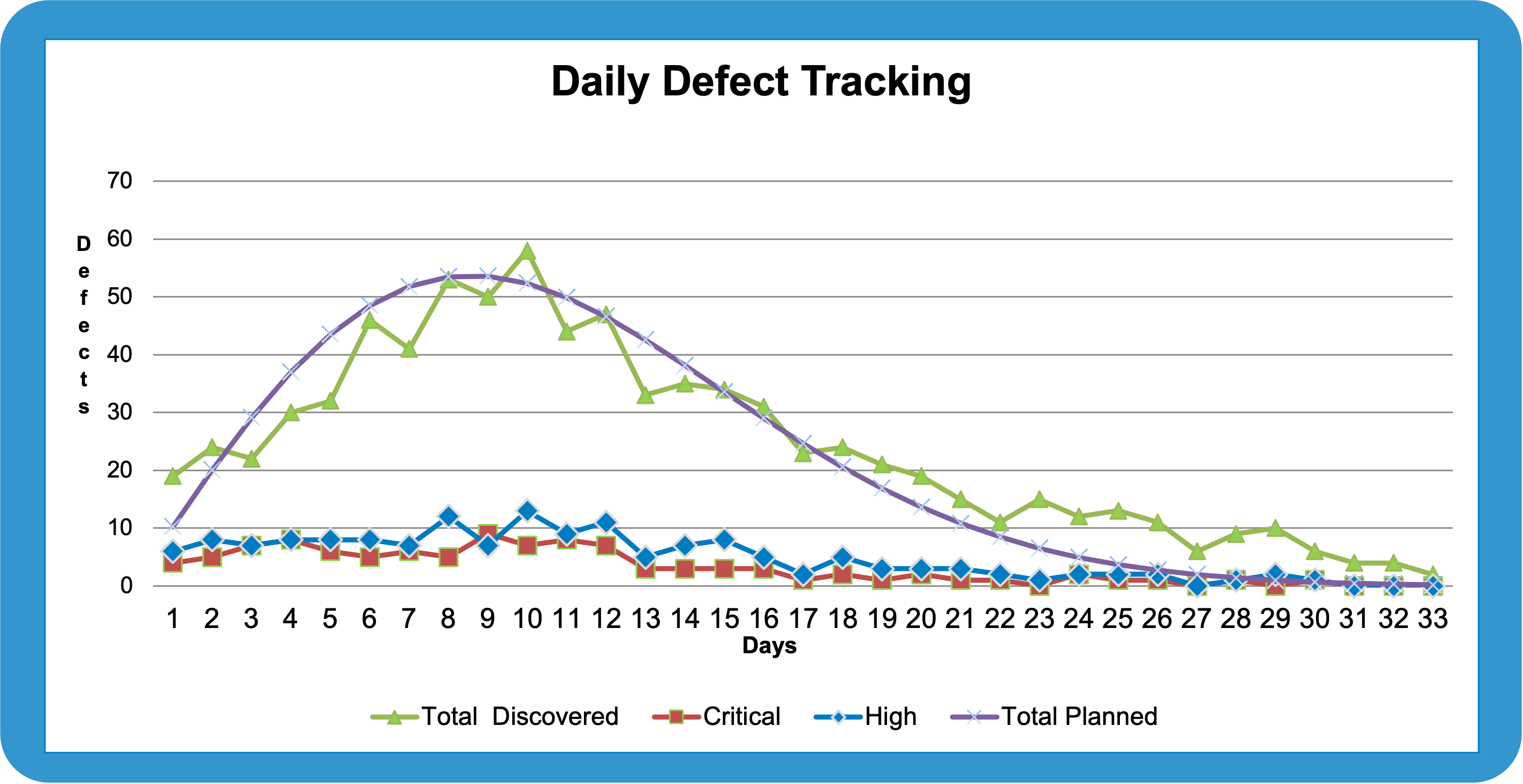

When your software team is knee-deep in the testing process, how do you know whether they're meeting, exceeding, or falling short of their test plan? If you're running behind schedule, can you identify the root cause? Without a metrics-based plan, testers typically lack defined exit criteria, which makes it very difficult for them to determine when testing is actually finished.

Applying metrics to regression test planning allows you to be proactive rather than reactive. It gives you the information you need to accurately scope your test effort, measure quality across all releases and products, estimate the number of test cases, increase team productivity, and track the efficiency and effectiveness of your team over multiple test cycles.

So, what specific metrics should you measure?

Key Metrics

Here at Lighthouse, we help software development teams better measure and manage their performance by dividing metrics into four quadrants: Test Progress, Product Quality, Test Efficiency, and Test Effectiveness.

1. Test Progress Metrics

Think of these as the project management metrics for the test team. For example, we planned to take x days to write our test cases, but we actually took y days. Seeing how you are doing against your plan allows you to learn, adjust, and keep improving over time. This insight will help you create more accurate plans in the future, rather than just guessing how much time, money, and effort a project will take.

2. Product Quality Metrics

These metrics indicate the quality of the work product. Most teams track some quality metrics, but one additional metric we suggest measuring is Defect Removal Efficiency (DRE). It shows how well your development and testing teams remove defects before they are released into production. Another metric we recommend, "Defects by Component," requires slightly more work, but it often has immense value: it lets you easily identify which areas of the system are most fragile so you can investigate the root cause.
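To make these two quality metrics concrete, here is a minimal Python sketch. It assumes the common definition of DRE (defects found before release divided by all defects found before and after release, expressed as a percentage) and assumes each defect report is tagged with a component name. The function names and sample numbers are hypothetical, for illustration only.

```python
from collections import Counter


def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """DRE as a percentage: share of all known defects caught before release."""
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded yet; DRE is undefined")
    return 100.0 * found_before_release / total


def defects_by_component(defect_components: list[str]) -> list[tuple[str, int]]:
    """Count defects per component, most fragile (highest count) first."""
    return Counter(defect_components).most_common()


# Hypothetical numbers for illustration only.
print(f"DRE: {defect_removal_efficiency(90, 10):.1f}%")  # DRE: 90.0%
# Each entry is the component tag recorded on one defect report.
defect_log = ["Checkout", "Search", "Checkout", "Login", "Checkout", "Search"]
print(defects_by_component(defect_log))  # [('Checkout', 3), ('Search', 2), ('Login', 1)]
```

What counts as "found after release" is a choice your team has to make, typically defects reported within an agreed window after the release goes live.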

3. Test Efficiency Metrics

Efficiency measures how quickly (and at what cost) we perform testing tasks. For example, to track the efficiency of your test writing, we recommend measuring Planned vs. Actual effort to write test cases. This is best measured as an aggregate across the entire testing team. Graphing it daily keeps your team from drifting too far off course before you can intervene and provide additional help. It is a simple project management graph that can have an immediate and significant impact on your ability to coach your team and build a better test plan.
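Here is a minimal Python sketch of that daily Planned vs. Actual check. It assumes the plan is expressed as a fixed number of hours per test case and that the team logs its total hours and completed test cases each day; the 15% tolerance, the class and function names, and the sample data are all hypothetical, not a prescribed standard. Instead of drawing a graph, it prints the cumulative numbers you would plot and flags days where actual effort has drifted beyond the tolerance.

```python
from dataclasses import dataclass


@dataclass
class DailyLog:
    day: int
    hours_spent: float  # total hours the whole team spent writing test cases that day
    cases_written: int  # test cases completed that day, summed across the team


def effort_variance(logs: list[DailyLog], planned_hours_per_case: float,
                    tolerance: float = 0.15) -> list[tuple[int, float, float, float, bool]]:
    """Return (day, planned, actual, ratio, off_course) rows using cumulative totals.

    "planned" is the effort the plan budgets for the cases completed so far;
    "actual" is the effort the team really spent. off_course is True when
    actual exceeds planned by more than `tolerance` (hypothetical 15% default).
    """
    rows = []
    planned_total = 0.0
    actual_total = 0.0
    for log in logs:
        planned_total += log.cases_written * planned_hours_per_case
        actual_total += log.hours_spent
        if planned_total:
            ratio = actual_total / planned_total
        else:
            # Hours spent with nothing completed yet counts as off course.
            ratio = float("inf") if actual_total else 1.0
        rows.append((log.day, planned_total, actual_total, ratio, ratio > 1 + tolerance))
    return rows


# Hypothetical data: five testers at 8 hours each per day; plan assumes 2 hours per test case.
logs = [DailyLog(1, 40, 20), DailyLog(2, 40, 16), DailyLog(3, 40, 12)]
for day, planned, actual, ratio, off_course in effort_variance(logs, planned_hours_per_case=2.0):
    flag = "  <-- intervene" if off_course else ""
    print(f"day {day}: planned {planned:.0f}h, actual {actual:.0f}h, ratio {ratio:.2f}{flag}")
```

Cumulative totals are used so that one slow day does not raise an alarm on its own, while a sustained drift does. With this sample data, only day 3 is flagged (96 planned hours against 120 actual, a ratio of 1.25).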

4. Test Effectiveness Metrics

Effectiveness measures how good a job we are doing. Here at Lighthouse, we use a couple of key effectiveness metrics. One great example is Mean Time to Defect (MTTD), which measures how much testing time, on average, it takes the entire test team to find each defect. In the beginning, when the system is loaded with bugs, your team may find a defect every 30 to 45 minutes. As the system becomes more stable, it should take your team longer to find bugs. This metric is extremely insightful because it indicates how often users are likely to find bugs once the product goes to market. (Pro Tip: we recommend an exit criterion of 8 to 10 hours, so that an initial user would have to use the product full-time for a whole day before they are likely to find a bug.)
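A minimal Python sketch of MTTD and the exit-criterion check follows. It assumes you record, for each window (a day, here), the total hours the whole team spent testing and the number of defects found. The 8-hour default matches the lower end of the exit criterion above; the function names and the sample cycle are hypothetical.

```python
def mean_time_to_defect(team_test_hours: float, defects_found: int) -> float:
    """Average testing hours, summed across the whole team, per defect found."""
    if defects_found == 0:
        return float("inf")  # no defects found in this window
    return team_test_hours / defects_found


def meets_exit_criterion(mttd_hours: float, threshold_hours: float = 8.0) -> bool:
    """True once defects are, on average, at least `threshold_hours` apart."""
    return mttd_hours >= threshold_hours


# Hypothetical test cycle: (total team test hours, defects found) for each day.
cycle = [(40, 60), (40, 20), (40, 8), (40, 4)]
for day, (hours, defects) in enumerate(cycle, start=1):
    mttd = mean_time_to_defect(hours, defects)
    print(f"day {day}: MTTD {mttd:.2f} h, exit criterion met: {meets_exit_criterion(mttd)}")
```

With this sample data, day 1 works out to one defect every 40 minutes (inside the 30 to 45 minute range described above), and only day 4, at 10 hours per defect, clears the 8-hour exit criterion.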

As a Software Leader, encouraging your team to apply a Metric-Based Test Methodology will give you the visibility you need to manage and coach, as well as confidence that your team is on a path to continuously learn, adjust, and improve. This will allow them to stop making guesses and start creating test plans with accurate deadlines and budgets. Measuring in our Key Metric Quadrants will create a continuous feedback loop that will save your organization both time and money. But remember, start simple. You can always add more metrics later.

To learn more about how you can improve your organization's software quality and keep your projects on course, keep an eye out for the rest of this blog series: The Benefits of Having a Metric-Based Test Methodology.

- Testing Process Maturity – Complete

- Applying Metrics in Regression Test (or Waterfall) – Complete

- Applying Metrics in Agile

Contact us to learn more or Register Now to attend our webinar on Wednesday, July 20 at Noon (EDT) where we will be discussing the entire series. We hope to see you there!